TL;DR: DiffuMatting is a versatile model capable of generating any object and providing high-precision, matting-level annotations. This gives it broad applicability and makes it a valuable tool for various downstream tasks.

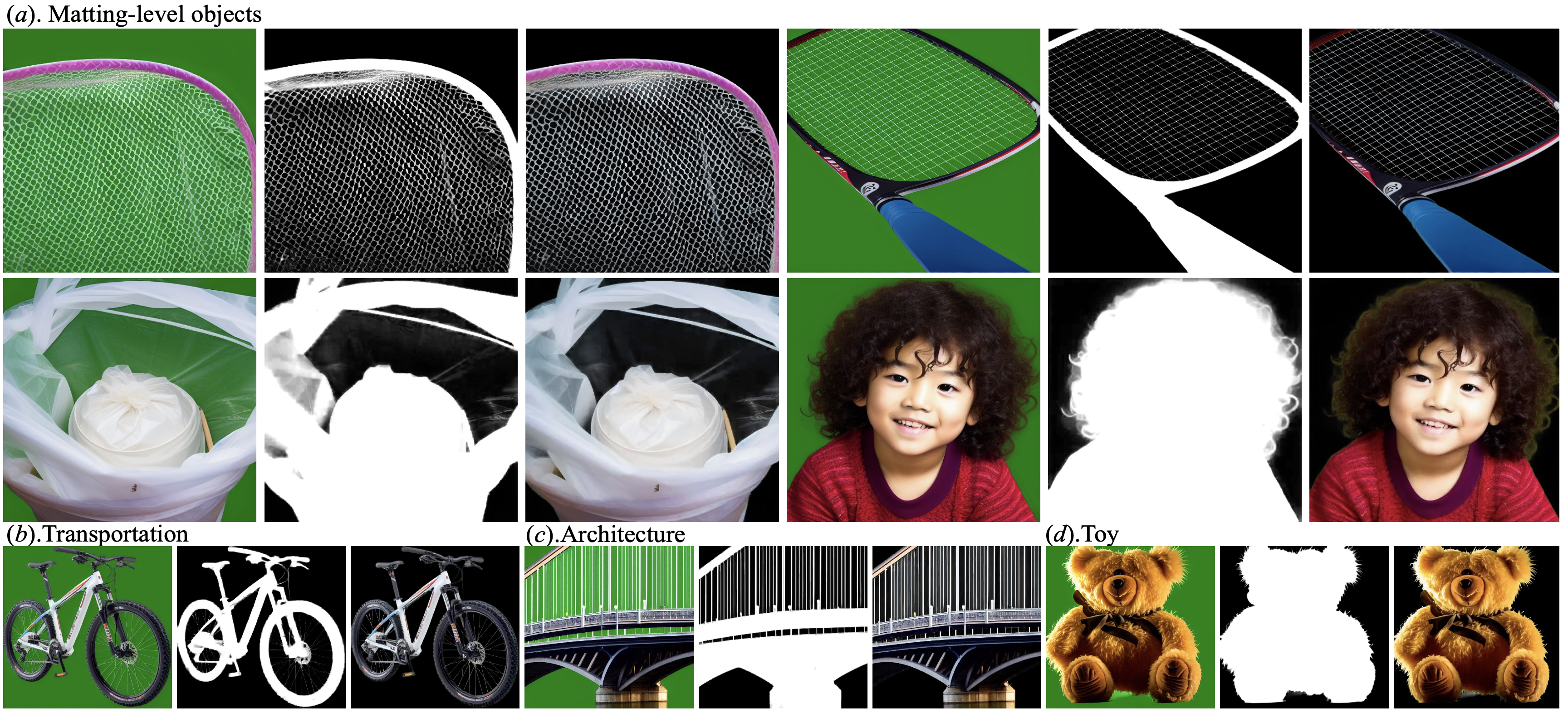

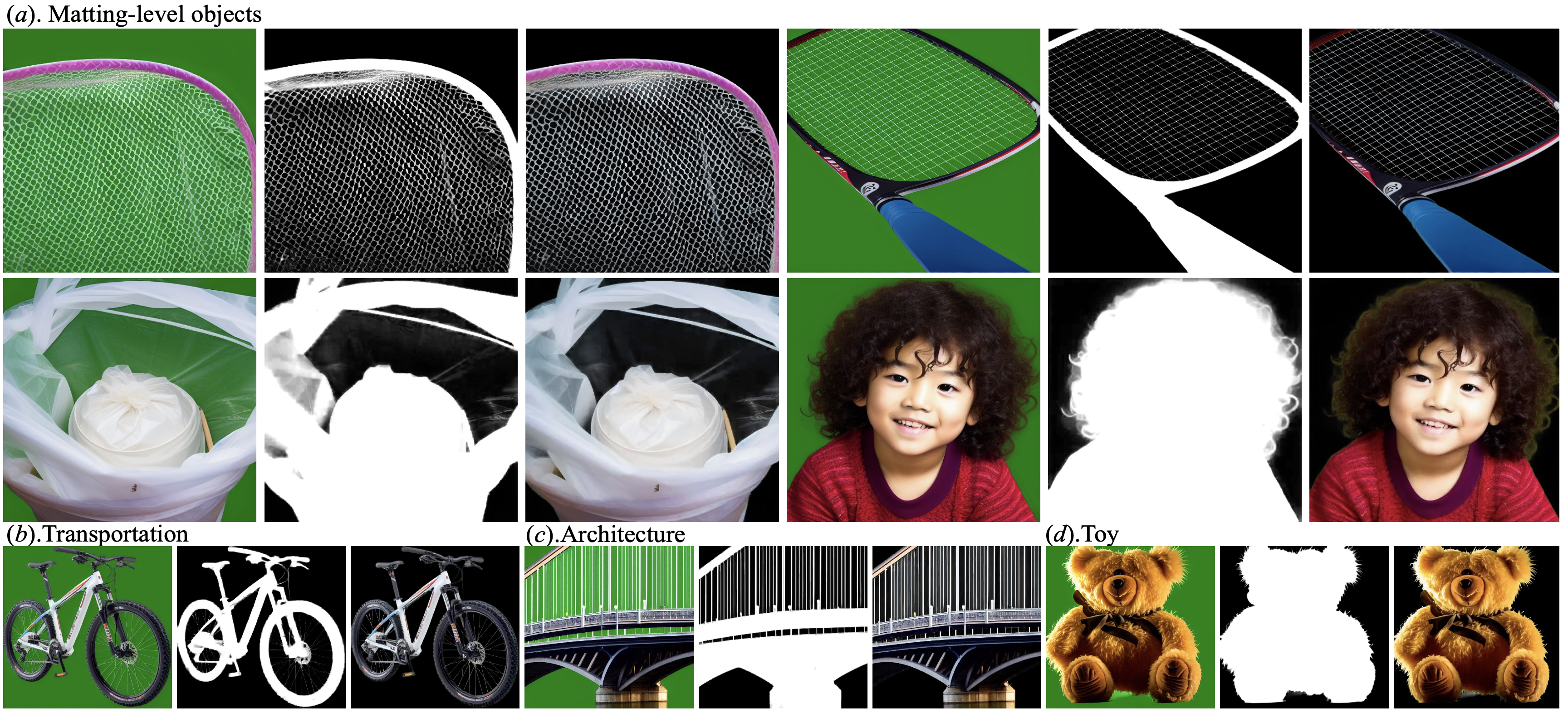

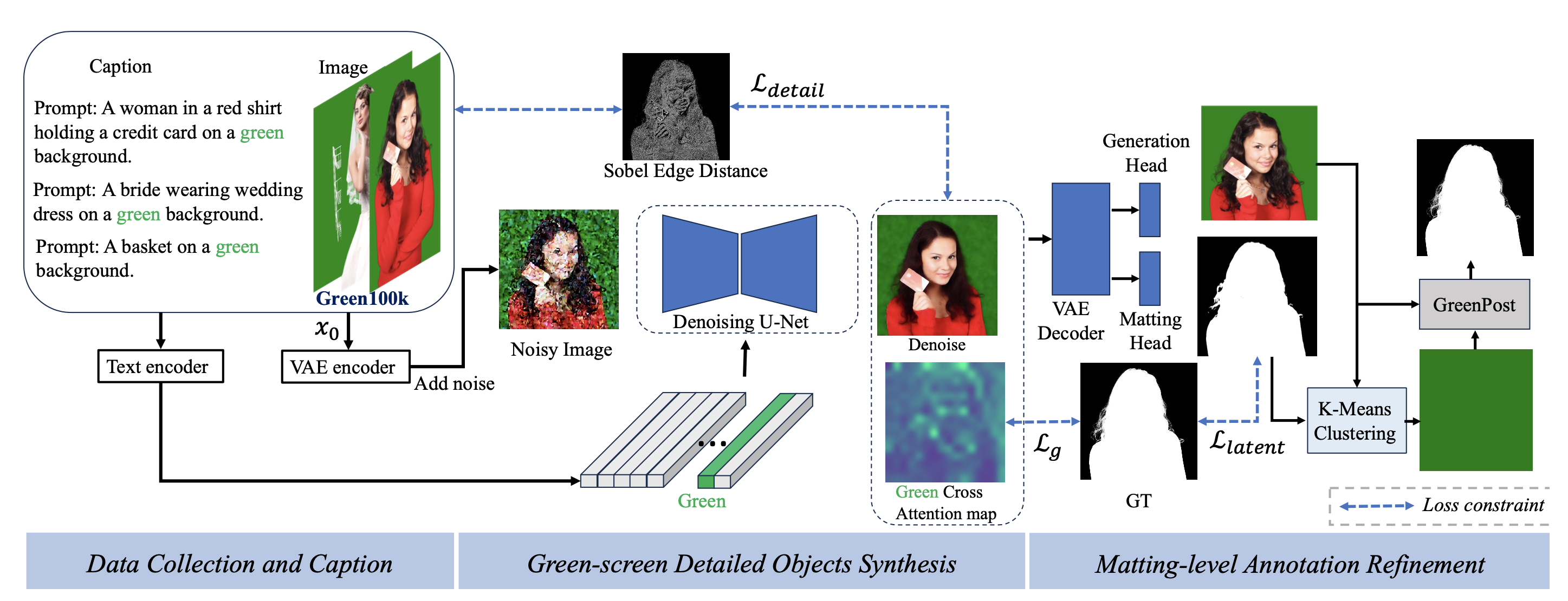

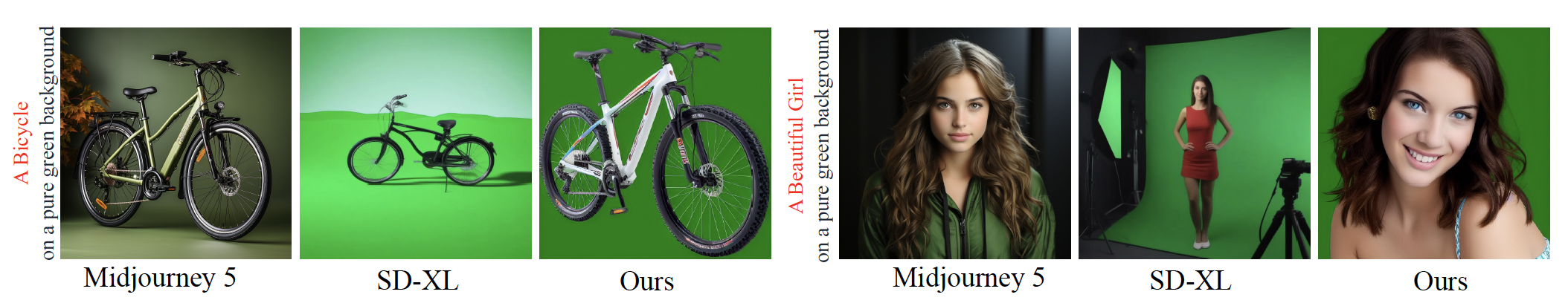

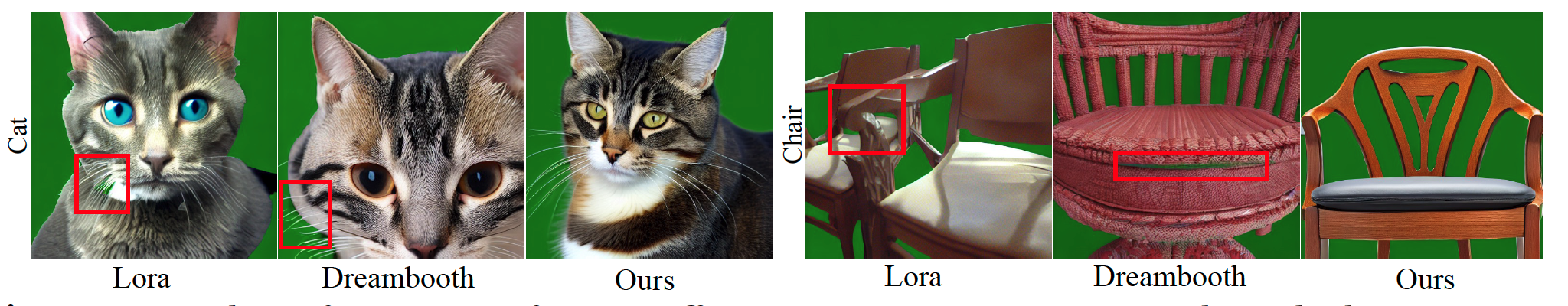

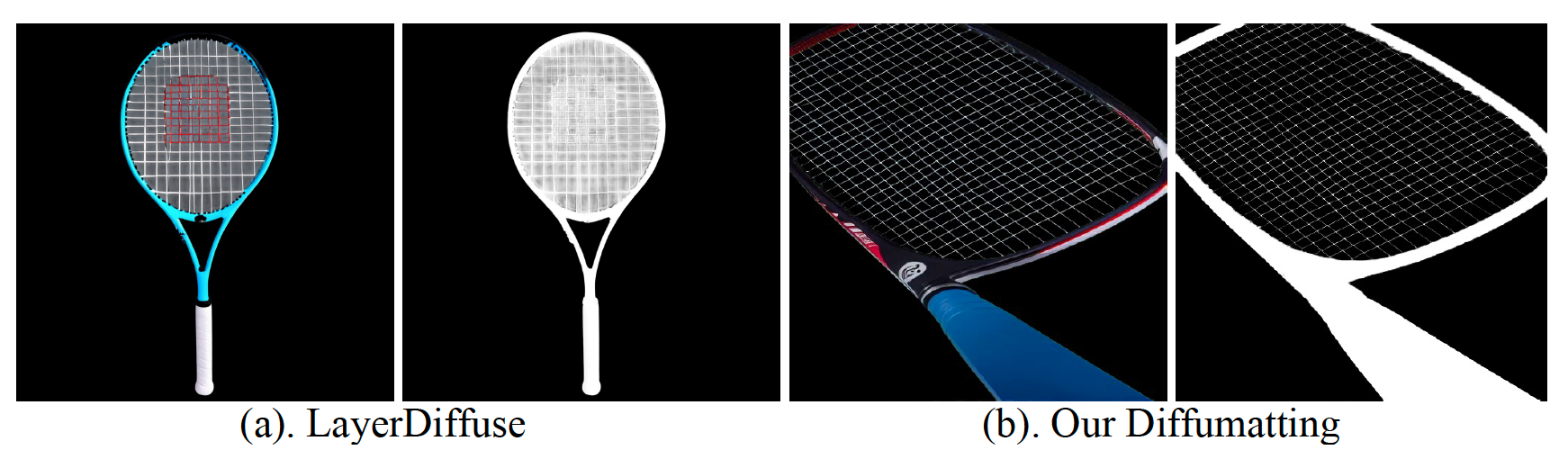

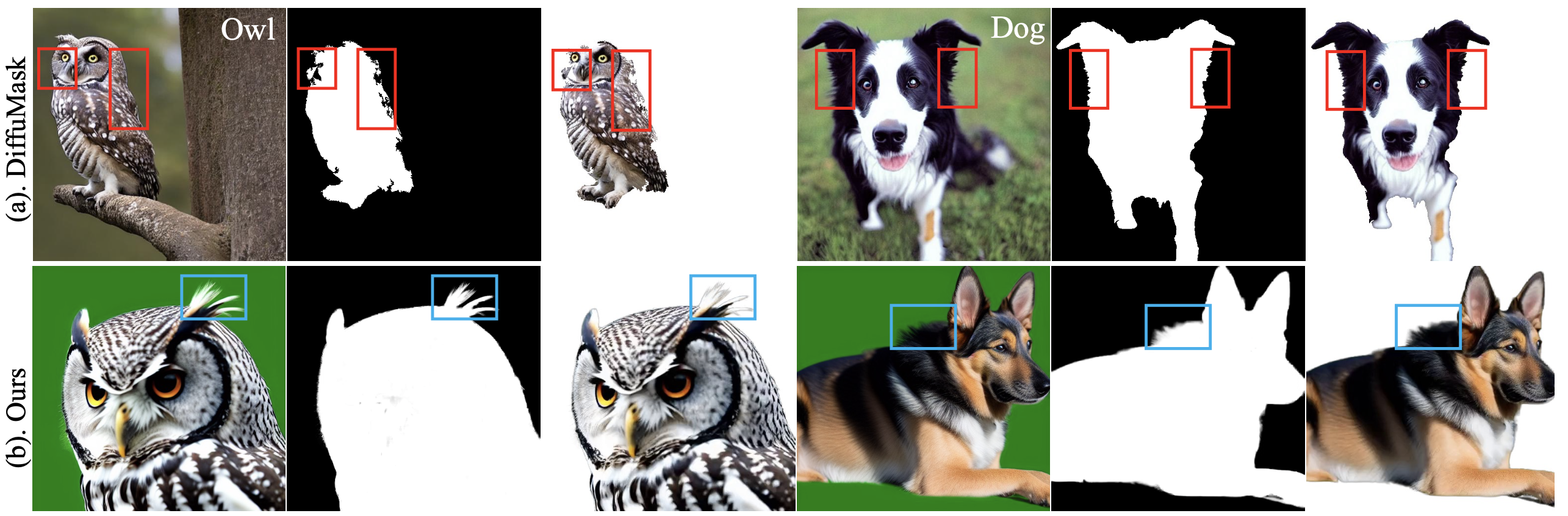

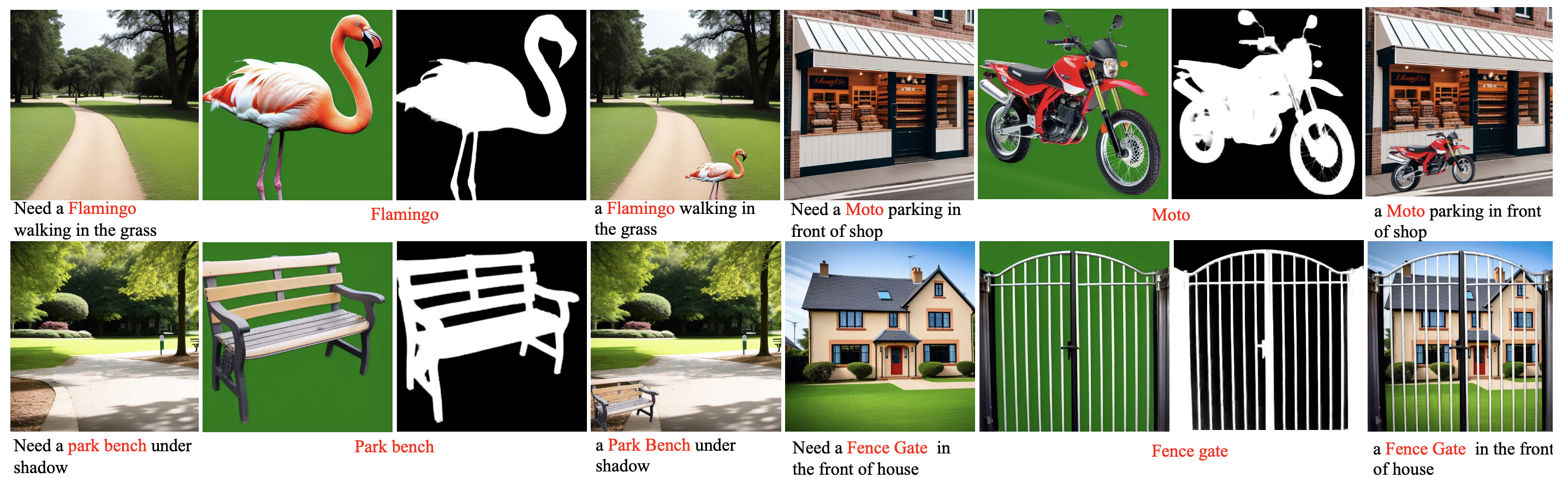

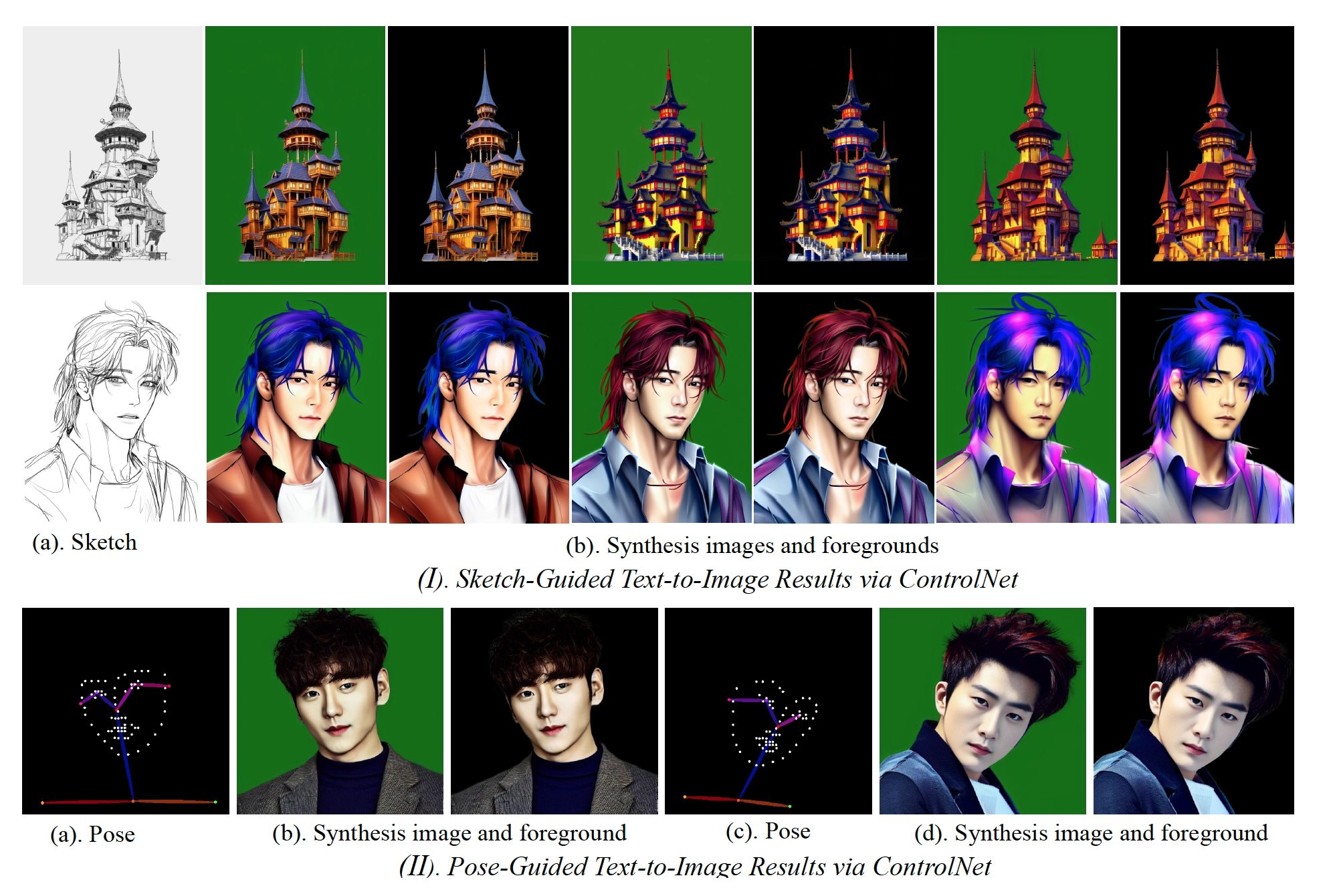

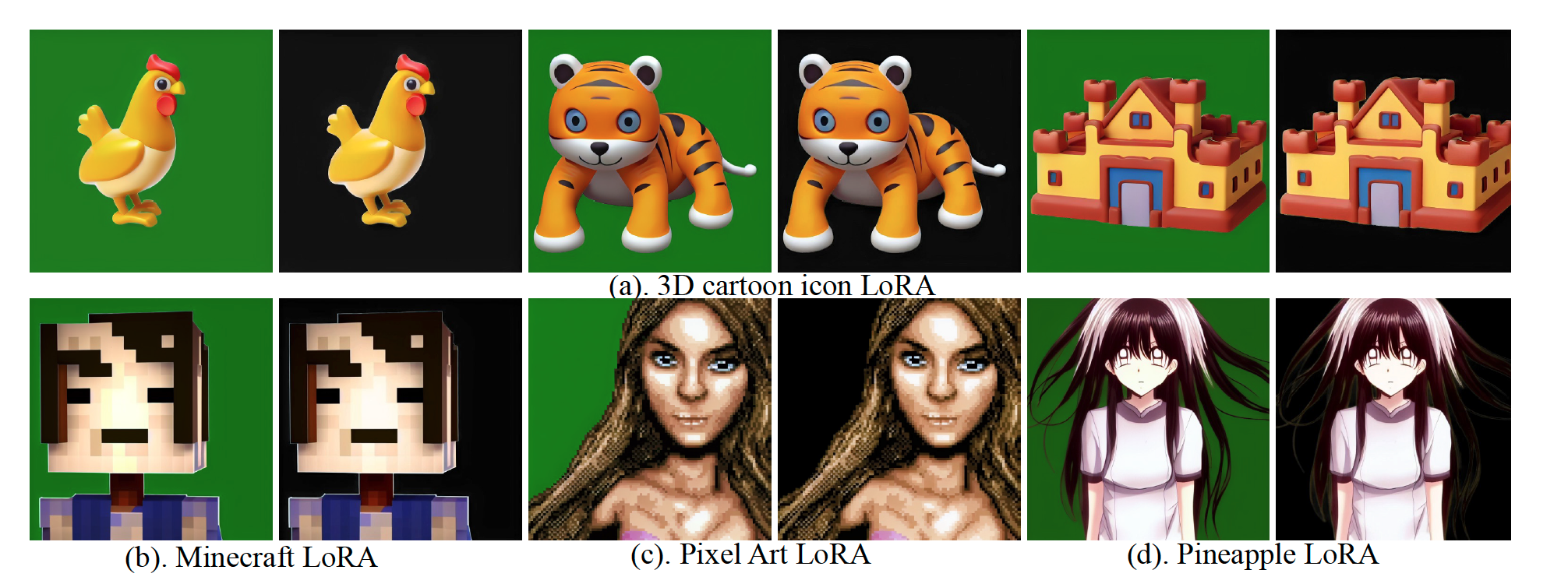

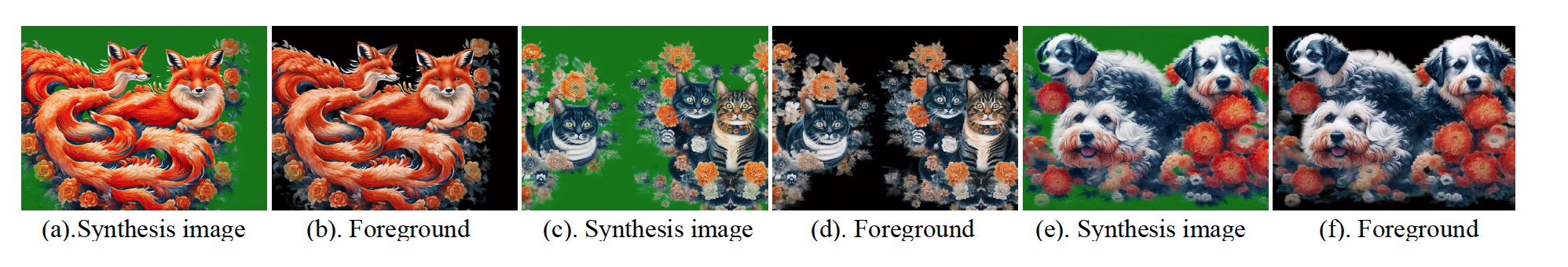

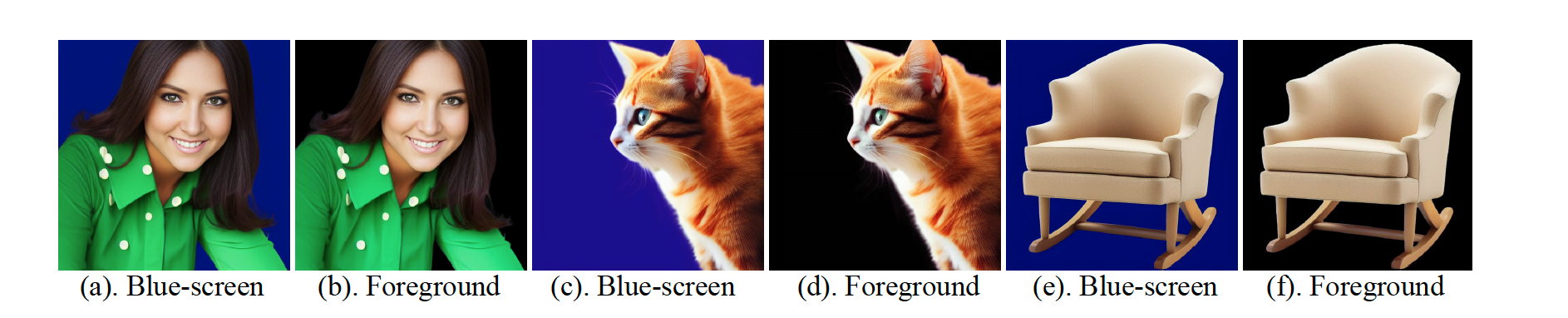

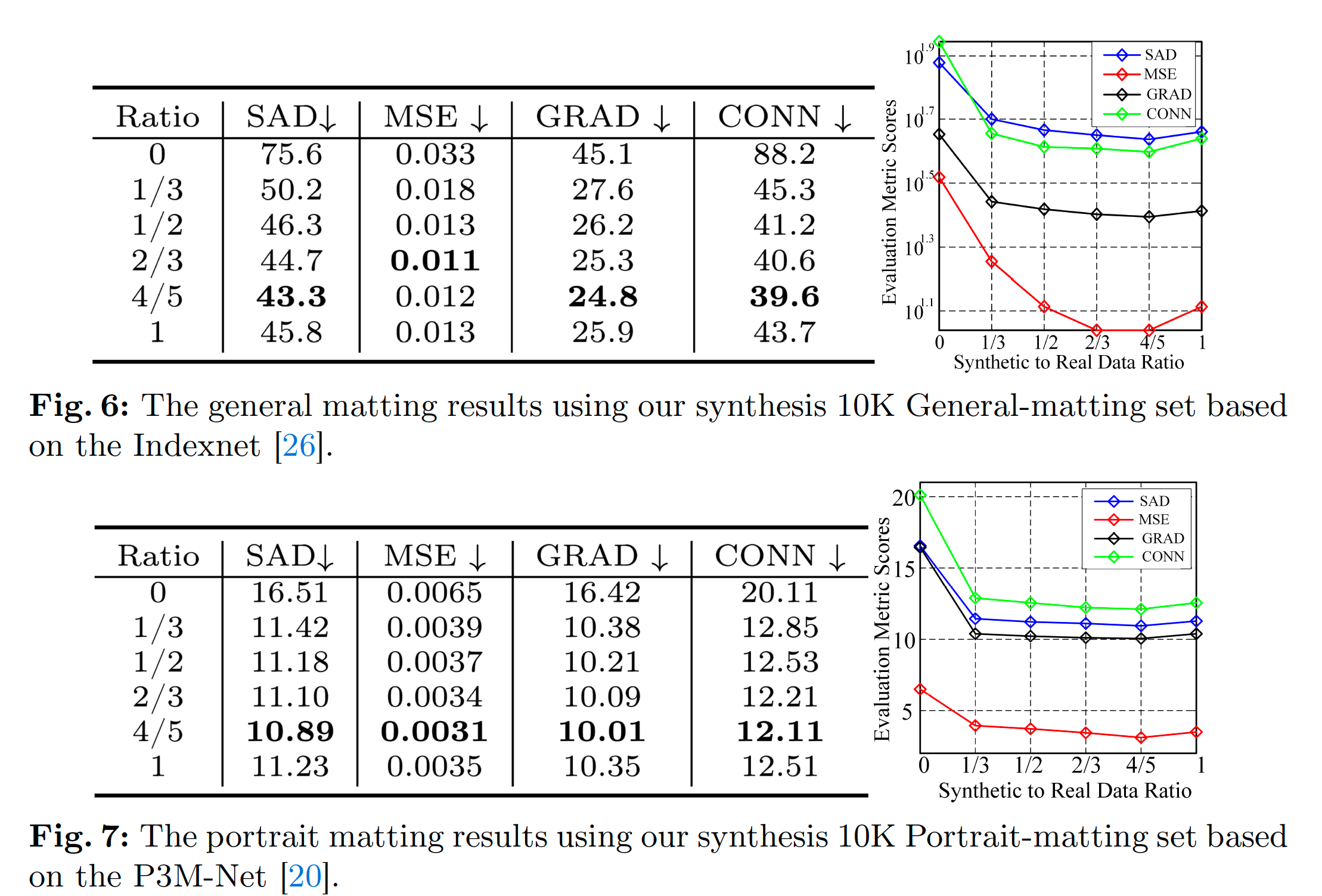

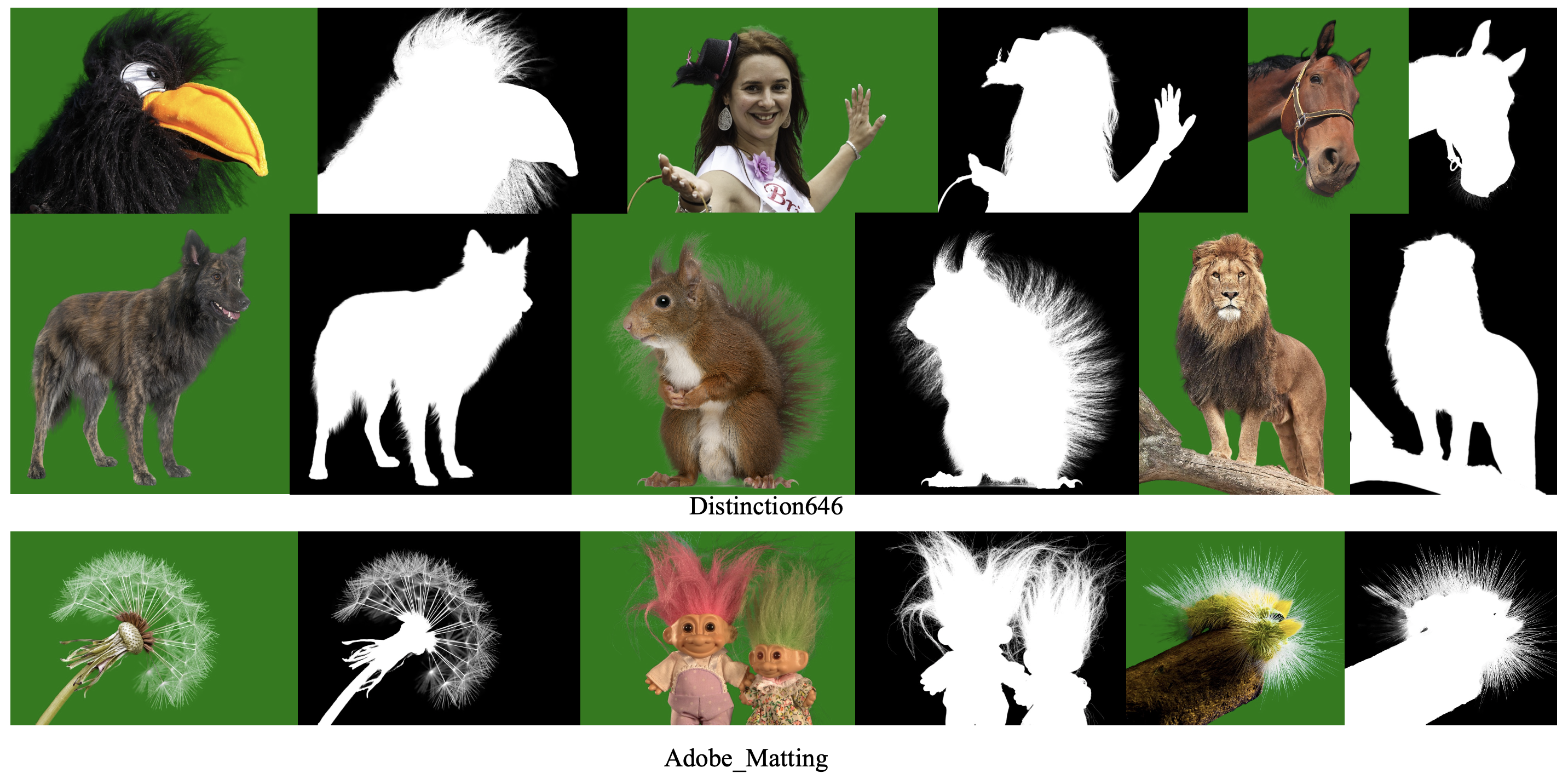

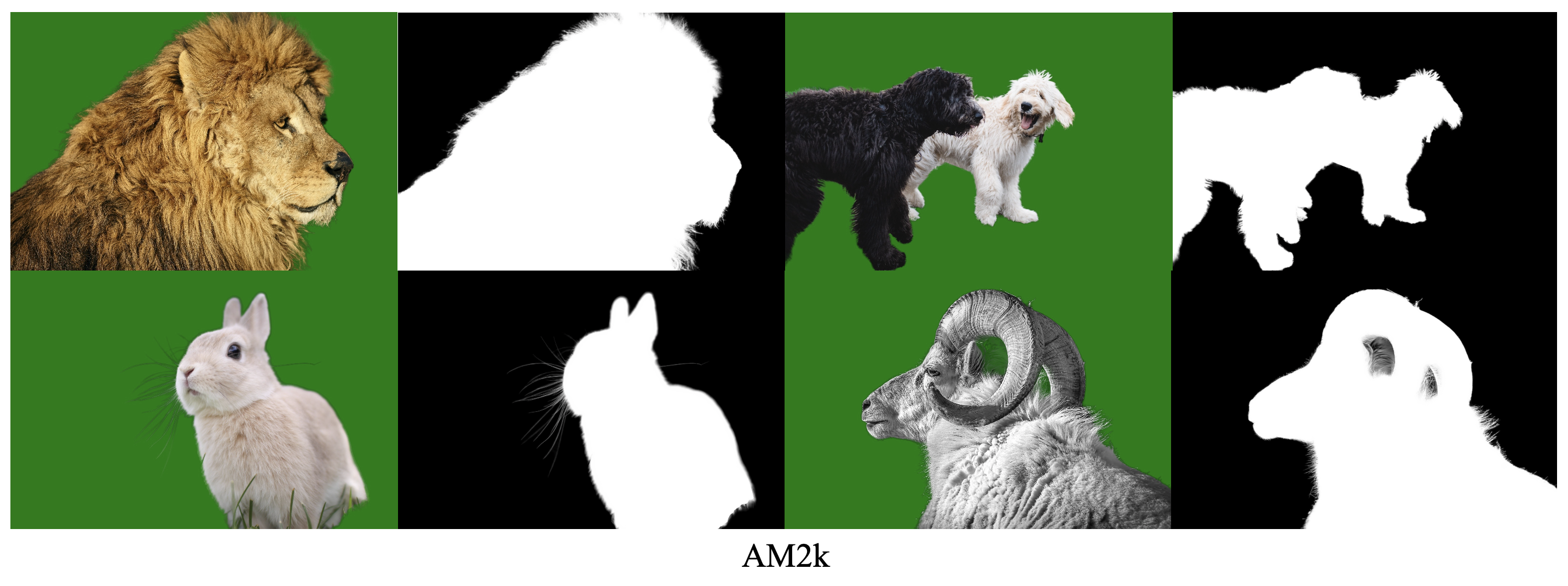

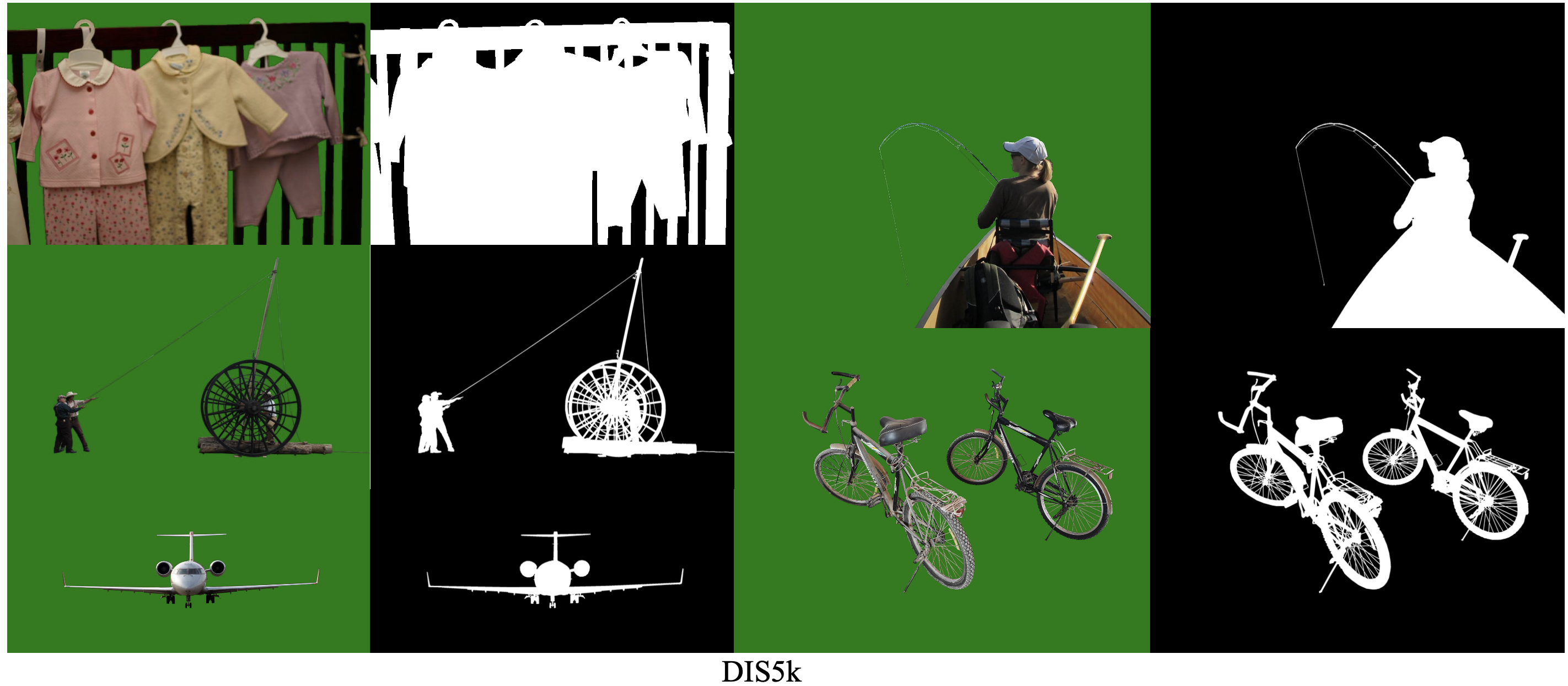

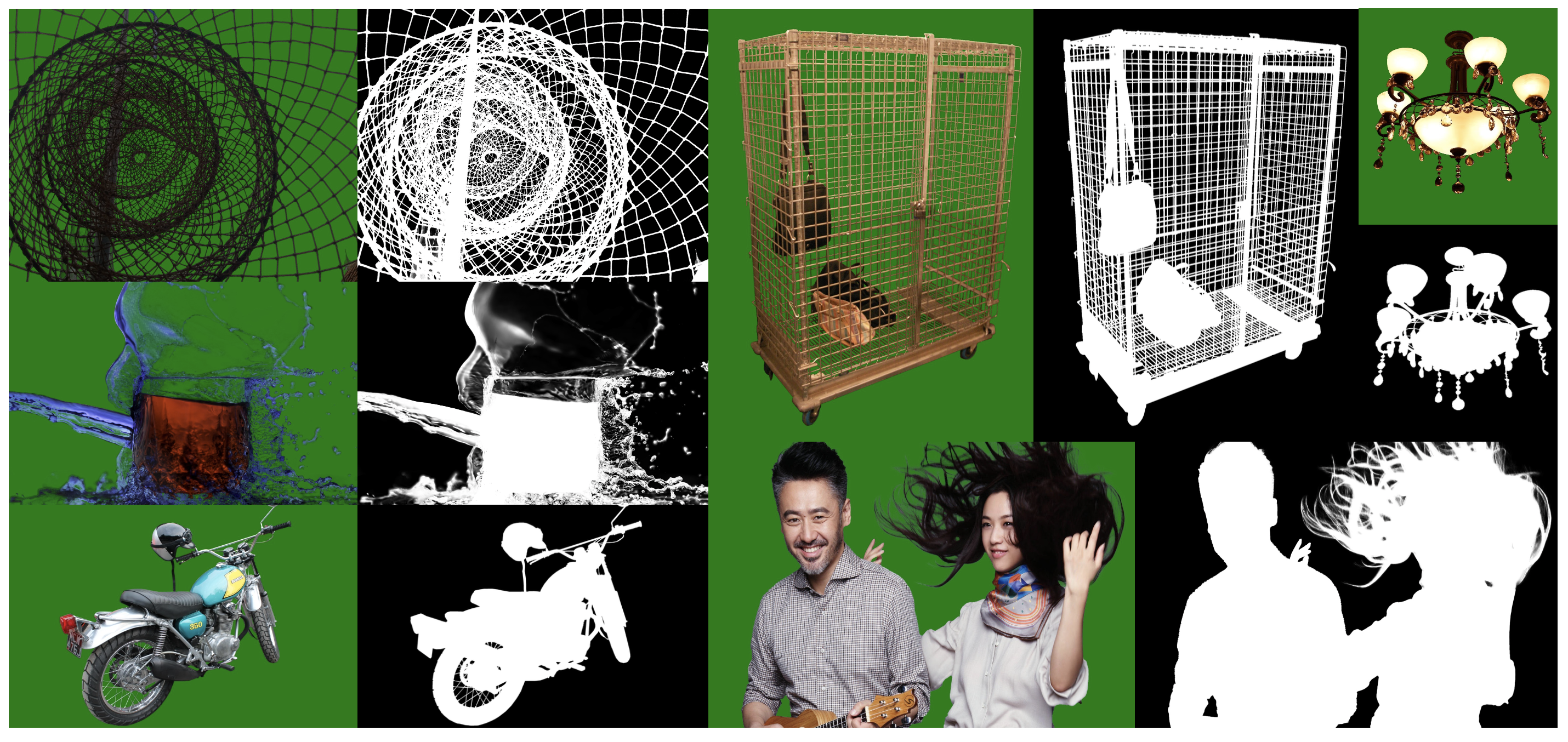

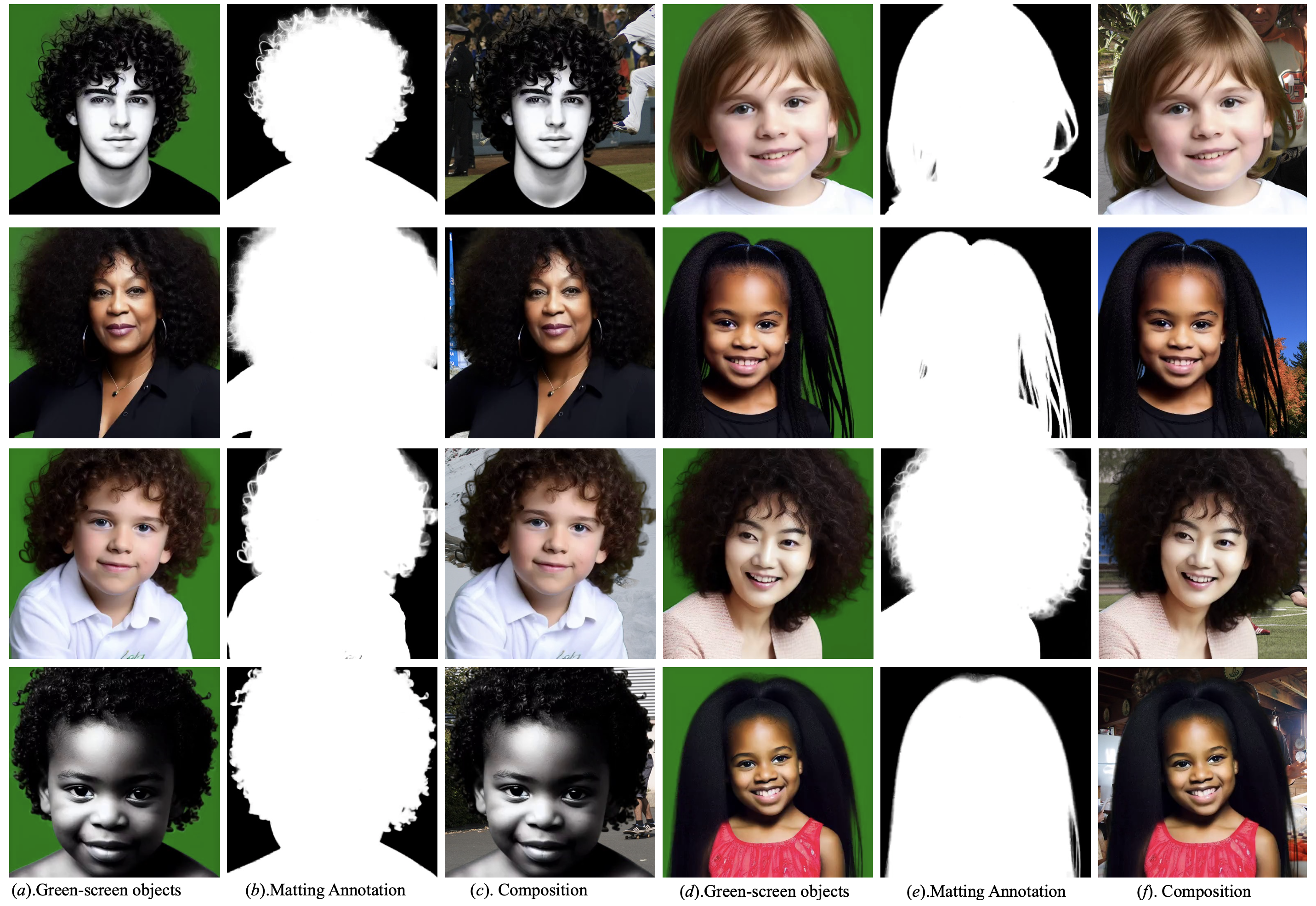

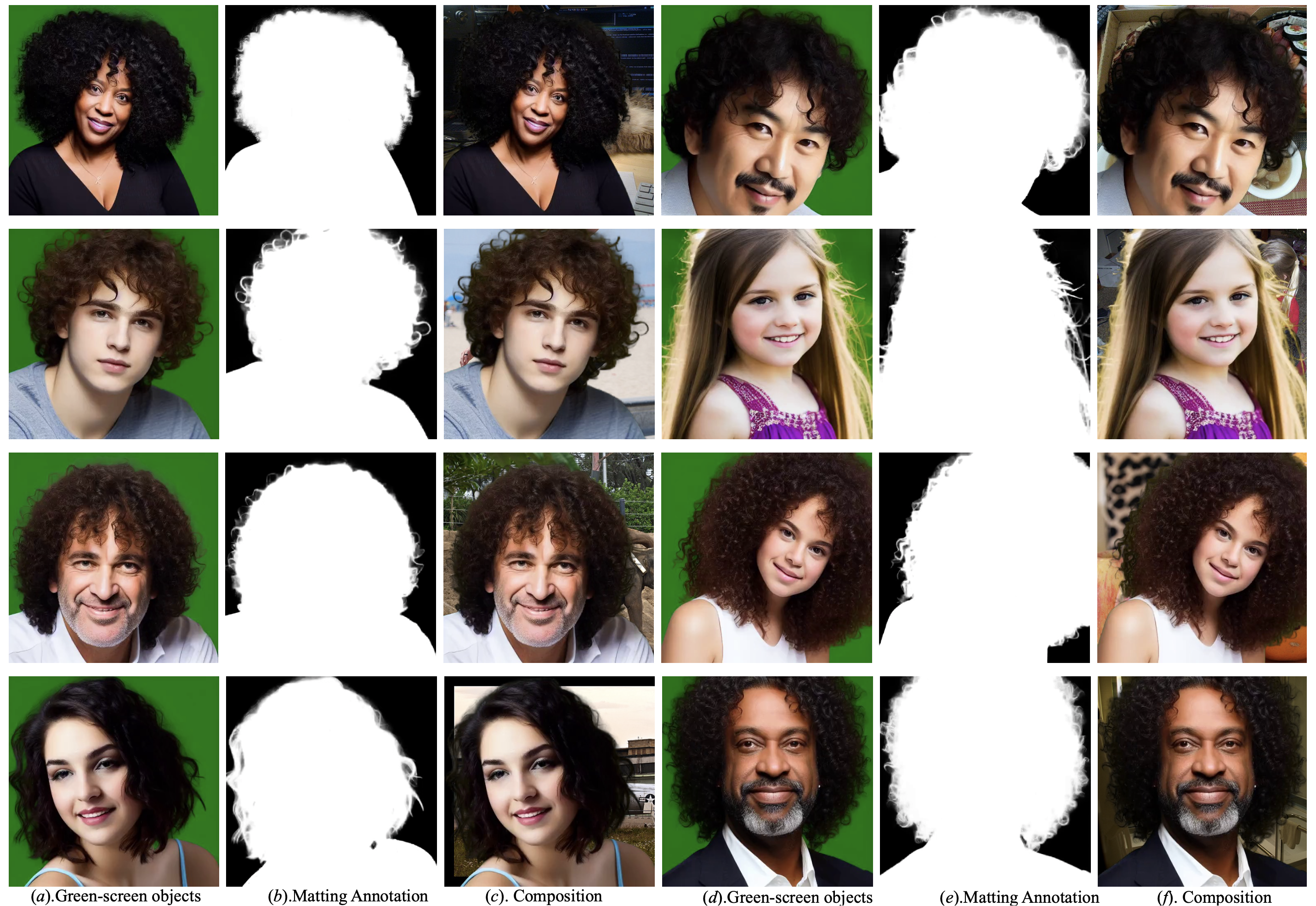

Because highly accurate, matting-level annotations are difficult and labor-intensive to obtain, only a limited number of such labels are publicly available. To tackle this challenge, we propose DiffuMatting, which inherits the strong "generate anything" ability of diffusion models and adds the power of "matting anything". DiffuMatting can (1) act as an "anything matting" factory that produces highly accurate annotations, and (2) work well with community LoRAs and various conditional control approaches, enabling community-friendly art design and controllable generation.

Specifically, inspired by green-screen matting, we teach the diffusion model to paint on a fixed green-screen canvas. To this end, we first collect a large-scale green-screen dataset (Green100K) to train DiffuMatting. Second, we propose a green background control loss that keeps the drawing board pure green, so that the foreground can be distinguished from the background. To give synthesized objects richer edge details, we propose a detail-enhancement transition boundary loss that guides the model to generate objects with more complicated edge structures. To generate the object and its matting annotation simultaneously, we build a matting head that performs green-color removal in the latent space of the VAE decoder.

DiffuMatting has several potential applications, e.g., matting-data generation, community-friendly art design, and controllable generation. As a matting-data generator, DiffuMatting synthesizes general-object and portrait matting sets, reducing the relative MSE error by 15.4% in general object matting and by 11.4% in portrait matting.
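To illustrate why a pure green canvas makes foreground/background separation tractable, here is a minimal pixel-space sketch. It is not DiffuMatting's implementation: the paper's green background control loss is applied during diffusion training and its matting head works in the VAE decoder's latent space, whereas the functions below (`green_background_loss`, `green_key_alpha`, `composite`, all hypothetical names) operate on plain RGB tuples in [0, 1]. The loss is assumed to be a mean squared distance to pure green over background pixels, and the alpha estimate is a classic chroma-key ramp with illustrative `tol`/`soft` thresholds.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB, each channel in [0, 1]
PURE_GREEN: Pixel = (0.0, 1.0, 0.0)


def green_background_loss(pixels: List[Pixel], bg_mask: List[bool]) -> float:
    """Hypothetical stand-in for a green background control loss:
    mean squared distance to pure green, averaged over background pixels only."""
    sq_errs = [
        sum((c - g) ** 2 for c, g in zip(p, PURE_GREEN))
        for p, is_bg in zip(pixels, bg_mask)
        if is_bg
    ]
    return sum(sq_errs) / len(sq_errs) if sq_errs else 0.0


def green_key_alpha(p: Pixel, tol: float = 0.1, soft: float = 0.4) -> float:
    """Chroma-key alpha: 0 within `tol` of pure green, 1 beyond `tol + soft`,
    and a linear ramp in between (the soft transition boundary)."""
    dist = sum((c - g) ** 2 for c, g in zip(p, PURE_GREEN)) ** 0.5
    if dist <= tol:
        return 0.0
    if dist >= tol + soft:
        return 1.0
    return (dist - tol) / soft


def composite(fg: Pixel, bg: Pixel, alpha: float) -> Pixel:
    """Standard matting equation I = alpha * F + (1 - alpha) * B, per channel."""
    return tuple(alpha * f + (1.0 - alpha) * b for f, b in zip(fg, bg))


if __name__ == "__main__":
    # Toy 3-pixel "image": pure green, slightly off-green, and a reddish object pixel.
    pixels = [(0.0, 1.0, 0.0), (0.1, 0.9, 0.0), (0.8, 0.2, 0.1)]
    bg_mask = [True, True, False]
    print("green background loss:", green_background_loss(pixels, bg_mask))
    for p in pixels:
        a = green_key_alpha(p)
        print(p, "alpha =", round(a, 3), "on white:", composite(p, (1.0, 1.0, 1.0), a))
```

The off-green background pixel is penalized by the loss and receives a fractional alpha, which is exactly the kind of spill a pure-green canvas is meant to prevent. Hand-tuned keying like this fails on green objects and fine structures such as hair, which is one reason a learned matting head is preferable to fixed thresholds.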

```bibtex
@article{hu2024diffumatting,
  title={DiffuMatting: Synthesizing Arbitrary Objects with Matting-level Annotation},
  author={Hu, Xiaobin and Peng, Xu and Luo, Donghao and Ji, Xiaozhong and Peng, Jinlong and Jiang, Zhengkai and Zhang, Jiangning and Jin, Taisong and Wang, Chengjie and Ji, Rongrong},
  journal={arXiv preprint arXiv:2403.06168},
  year={2024}
}
```